This is a question I regularly discuss with governors and senior leaders during training sessions on ‘understanding school performance data’: what is the data we collect about our pupils’ attainment and progress, and the analysis we produce from it, actually for? To prove to Ofsted that we are a good school? To predict next year’s results? To keep our governors happy? For teacher performance management? No! If the collection and analysis of assessment data isn’t leading to impact in the classroom, and enabling improvement in attainment and progress for individual students, there is no point in collecting or analysing it.

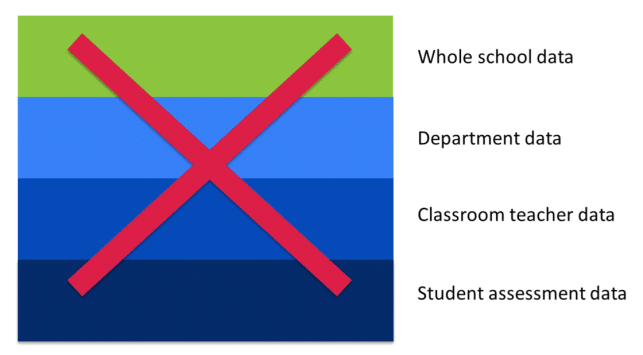

So which pupil data should we be looking at? I believe that instead of constantly worrying about the headline figures, we should focus firmly on the subjects, the classes and the individual students. Just as looking after the pennies means the pounds will look after themselves, looking after the students and departments means the overall performance measures will look after themselves. We need to focus on the small data, rather than the big data.

For example, school leaders could be asking questions such as:

- Where are our weaknesses and areas for development?

- How do our subjects compare one to another?

- How much does low level disruption affect progress?

- What are the key factors behind some students making less progress than their peers?

- How are our PP, SEND, looked-after and ethnic minority students progressing?

- Which group of students should be our main focus for intervention?

- Do we have individuals who need extra support?

- Are August born students at a disadvantage?

- Are we challenging our high prior attainers enough?

- Are our existing interventions working? If not, what are we changing?

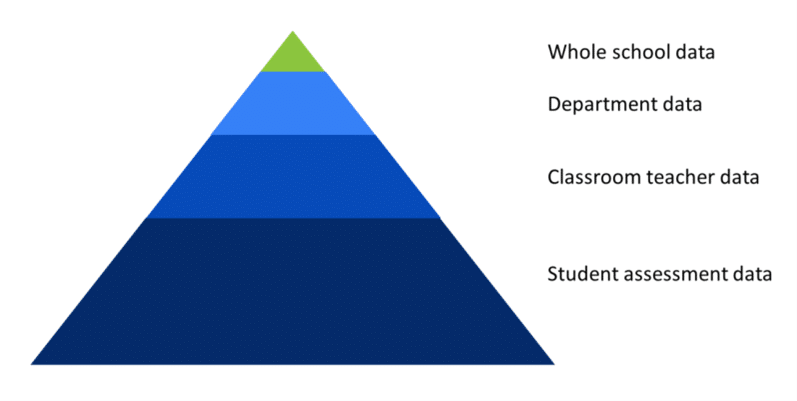

For me, the ideal structure of assessment data is bottom-up: what you collect at whole-school level should be as minimal as possible. Senior leaders should be able to use it to ask questions of subject or phase leads, subject or phase leads of teachers, and teachers of students, drawing on a greater wealth of information at each level.

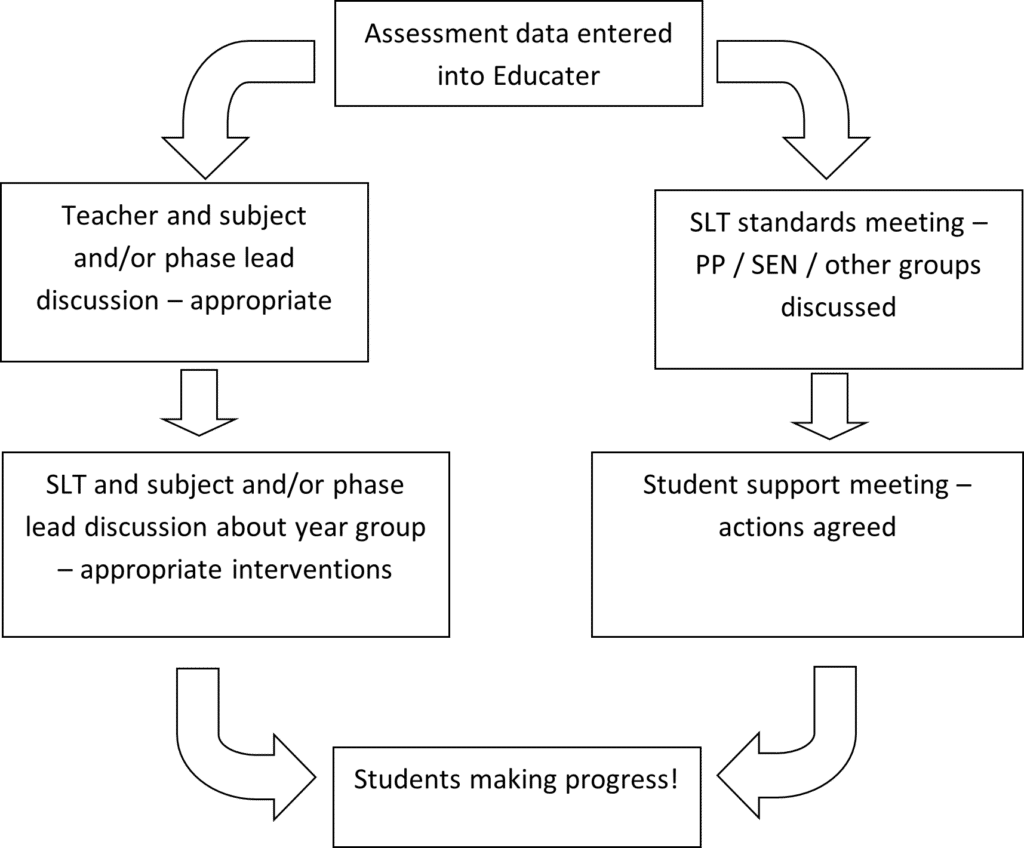

This is where what I call the ‘Informing Improvement’ plan comes into play: a regular programme of discussion and action. After each assessment point, the teacher and the subject or phase lead open up Educater together, and the teacher is expected to discuss the data they can see and what it tells them about the pupils in their class. The discussion could be centred around a short questionnaire, but the point of the meeting is to identify problem areas and formulate a plan of action and intervention. Subject or phase leads then take this intervention plan to their SLT for agreement.

At the same time, the SLT can carry out its own investigation. Educater makes it very easy to look at how different groups are performing, either against age-related expectations or over time. It would be the SLT’s job to look at any high-risk groups in the school, check their progress and, again, formulate a plan for student support where necessary.

In my work with schools, I have often come across resistance to data analysis at classroom level. Teachers may say:

- I don’t know what it’s used for

- It’s just paperwork and admin that gets in the way of teaching

- I’m not a ‘data person’, I don’t like numbers and figures

- I haven’t time to look at it.

However, with the help of Educater’s comprehensive, easy-to-access reports, we can aim to move towards teachers saying:

- I am careful about my assessments because I understand that they inform intervention and action not only in my subject but across the board.

- It supports my teaching by helping me understand where the strengths and weaknesses are, and helps me formulate a plan of action

- The data is intuitive, and tells me so much about my pupils, my class and my qualification

- I don’t just enter data, I use it.

If everyone ‘buys in’ to the process, we can be confident that looking at the data will, in fact, lead to the impact in the classroom that we are looking for.

About the author

Becky St.John

Becky is a school assessment and performance data specialist who is passionate about helping schools and multi-academy trusts use data effectively to improve outcomes. She started out as a data manager in a large secondary school, and this eventually led to a role as principal consultant for SISRA Ltd, supporting 1,600 schools across the UK in the management and analysis of their assessment data and exam results through consultancy and training.